Let’s Talk!

Human verbal communication is unique in its diversity and complexity. Our natural languages allow us to engage in complex interaction, planning, and discourse and, most importantly, in abstraction detached from time and place. Speaking and listening have served humanity well for millennia. The invention of the computer then made it necessary to develop new languages in which pure information could be transferred and processed unambiguously. Various programming languages have since become an integral part of human cultural heritage.

However, very few people are likely to interact directly with computer systems in the computers' own language. We therefore rely on interfaces that translate our human language into the language of computers so that the computers can perform tasks for us. Initially, punched cards and punched tape were used to communicate our requests and information to the computer. Since about 1970, text input and output have dominated our linguistic interaction with computer-based systems. Touch screens have played an increasingly important role since about 2000, but all these interfaces build an invisible barrier between us and the system. Only since about 2010 have we been talking to computers and smart devices such as smartphones and smart speakers in our own languages, but we still don't converse, gossip, or chat naturally with our digital helpers. Why not, actually?

User Acceptance – the Challenge

That we have not yet quite arrived at natural language communication when it comes to "voice assistance" certainly has to do with the massive complexity and inherent ambiguity of natural human language. Both make it very difficult for computers, which operate with strictly logical symbolic languages, to understand and respond to spoken commands well enough for people to accept voice assistants as an interface for human-machine communication. The frustration threshold is reached too quickly, and all too often the result falls short of expectations. This is especially true of "naive" users, who simply assume that a market-ready assistant works perfectly and have little or no understanding of the underlying complexity, let alone of the market pressure on manufacturers. But where exactly is the problem? To find out, we have to look at how voice assistants work in principle.

ASR and NLP

In automatic speech recognition (ASR), the sound waves are first digitized, i.e., converted into bits. An acoustic (phonetic) model then represents the relationship between these signals and the basic building blocks of words, the phonemes, in a form that computers can process. Pronunciation and language models, built with computational linguistic methods, use this data to relate each sound, in its order and context, to words and sentences.
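To make the step from sounds to words more concrete, here is a deliberately simplified Python sketch of what a pronunciation model contributes. The lexicon is a hypothetical toy with ARPAbet-style phoneme symbols, and `segmentations` is an illustrative helper that lists every way a phoneme sequence can be split into lexicon words. Real recognizers weigh such alternatives with probabilistic acoustic and language models instead of simply listing them; the sketch only shows why the same sounds can map to more than one sentence.

```python
from functools import lru_cache

# Hypothetical toy pronunciation lexicon: word -> phoneme sequence.
LEXICON = {
    "i": ("AY",),
    "ice": ("AY", "S"),
    "scream": ("S", "K", "R", "IY", "M"),
    "cream": ("K", "R", "IY", "M"),
}

def segmentations(phonemes):
    """Return every split of the phoneme sequence into lexicon words."""
    phonemes = tuple(phonemes)
    n = len(phonemes)

    @lru_cache(maxsize=None)
    def from_pos(start):
        # All word tuples that exactly cover phonemes[start:]
        if start == n:
            return ((),)
        results = []
        for word, pron in LEXICON.items():
            end = start + len(pron)
            if phonemes[start:end] == pron:
                for rest in from_pos(end):
                    results.append((word,) + rest)
        return tuple(results)

    return [list(words) for words in from_pos(0)]

print(segmentations(["AY", "S", "K", "R", "IY", "M"]))
# [['i', 'scream'], ['ice', 'cream']]
```

Both "I scream" and "ice cream" are valid readings of the same phonemes, which is exactly the kind of ambiguity that context-aware language models have to resolve.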

The first problem is that environmental noise, the position of the talker relative to the microphone, and competing speakers all influence the signal that the automatic speech recognition system receives as the basis for its evaluation. For perfect speech recognition, all interference would have to disappear so that only speech reaches the recognizer. In reality, this is virtually impossible, so automatic speech recognizers must be robust against interfering influences.
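One common way to study this robustness in the lab is to add a noise recording to clean speech at a defined signal-to-noise ratio (SNR). The following Python sketch shows the arithmetic, assuming plain RMS over the whole signal; the function names are hypothetical, and standardized measurements (e.g., the active speech level of ITU-T P.56) exclude speech pauses, which this sketch does not do. A 440 Hz tone and seeded Gaussian noise stand in for real recordings.

```python
import math
import random

def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def mix_at_snr(speech, noise, target_snr_db):
    """Return speech plus noise scaled so that the SNR equals target_snr_db."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]  # trim noise to the speech length
    # SNR_dB = 20*log10(rms(speech) / (gain*rms(noise)))  ->  solve for gain
    gain = rms(speech) / (rms(noise) * 10 ** (target_snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]

def snr_db(speech, noise_component):
    """SNR in dB from the clean speech and the noise that was added to it."""
    return 20 * math.log10(rms(speech) / rms(noise_component))

fs = 16000  # sample rate in Hz (assumed)
speech = [0.1 * math.sin(2 * math.pi * 440 * t / fs) for t in range(fs)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(fs)]
mixed = mix_at_snr(speech, noise, target_snr_db=10.0)
added = [m - s for m, s in zip(mixed, speech)]
print(round(snr_db(speech, added), 6))  # 10.0
```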

Once the speech has been recognized and converted into words and sentences, language processing begins. The catchword here is Natural Language Processing (NLP), a branch of computer science, and of artificial intelligence (AI) in particular, that gives computers the ability to understand the meaning of written text and spoken words in a similar way to a human being.

However, as stated earlier, human language is full of ambiguities that make it incredibly difficult to convey the intended meaning of text or speech data to a computer. Sarcasm, idioms and metaphors, homonyms and homophones, varying sentence structures, and exceptions are just a few of the hurdles that take humans years to master. Advanced AI methods in NLP have been making real progress in handling semantic and syntactic complexity for several years now. Thanks to deep learning models and techniques based on Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), these systems are indeed getting smarter. However, the capabilities of individual systems still differ significantly.

The Agony of Choice

For the manufacturers of automatic speech recognizers, and of the products in which they are used, it is imperative that their systems work flawlessly. But without in-depth analysis and testing in the real-world environments in which a recognizer operates, we cannot simply say which one works best in which device and in which situation. The microphone setup alone, for example close-talking versus desktop microphones, varies almost endlessly, resulting in different levels and distributions of spectral energy. So how can manufacturers of products that use ASR ensure that automatic speech recognition delivers optimal results?

Well, the more variations (environmental noise, competing speakers, and different positions of speakers relative to different microphones) come into play during testing, the better. Covering reality as closely as possible in the lab is essential for developing and improving devices with ASR systems. On the other hand, more variation makes testing more time-consuming and exact reproduction of the conditions much more difficult.
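Why more variation quickly becomes expensive is easy to see when the factors are combined in a full factorial test plan: the number of test conditions is the product of the number of levels per factor. The Python sketch below uses hypothetical factor names and placeholder levels, not a recommended test plan and not a VoCAS format.

```python
from itertools import product

# Hypothetical test factors and levels (placeholders for illustration only).
FACTORS = {
    "noise": ["quiet", "cafeteria", "car_100kmh", "train_platform"],
    "snr_db": [20, 10, 5],
    "talker_angle_deg": [0, 45, 90, 180],
    "competing_talker": [False, True],
}

def test_matrix(factors):
    """Yield one dict per combination of factor levels (full factorial design)."""
    names = list(factors)
    for levels in product(*(factors[name] for name in names)):
        yield dict(zip(names, levels))

cases = list(test_matrix(FACTORS))
print(len(cases))  # 96  (4 * 3 * 4 * 2)
```

With, say, 50 speech commands per condition, these four modest factors already add up to 4,800 utterances, which is why automation and exact reproducibility matter so much.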

Testing Variation Reproducibly

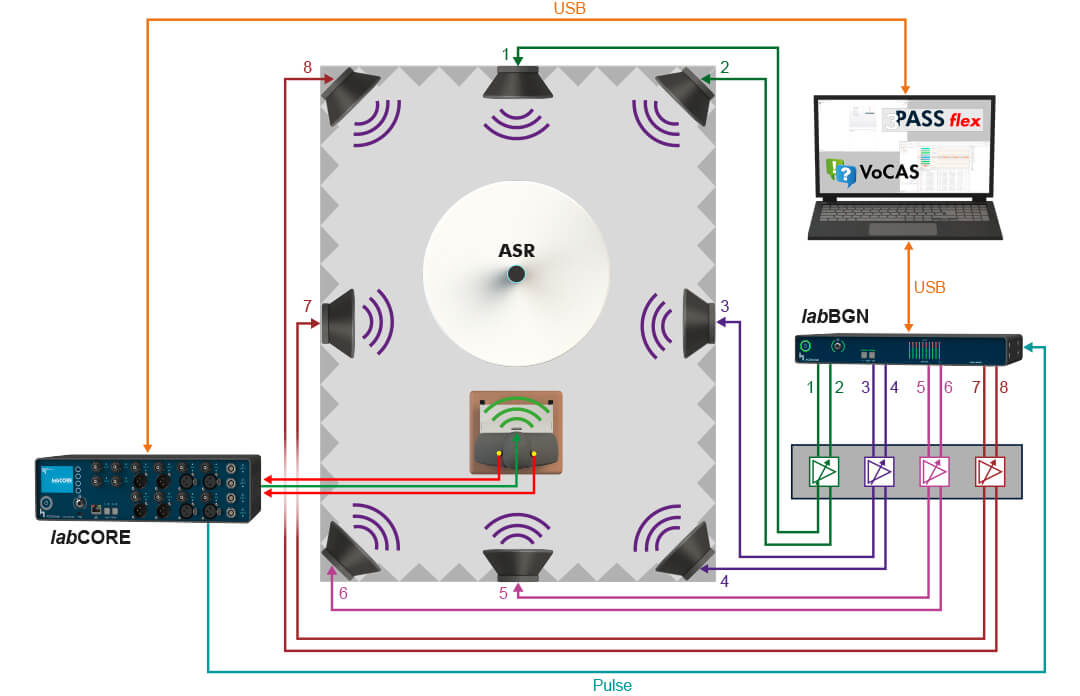

HEAD acoustics has developed the VoCAS (Voice Control Analysis System) software for precisely this purpose. Even in complex scenarios, it realistically and objectively evaluates how well devices with an integrated speech recognition function pre-process the signal. This pre-processing aims to rid the signal of all possible interfering and harmful artifacts before passing it on to the actual speech recognizer, whether that runs in the cloud, locally on the device, or on a local server.

With VoCAS, engineers conceptualize, organize and automate tests of ASR systems and devices in which they are used. VoCAS helps keep track of all factors such as background noise, reverberation, different languages and accents, and different – even multiple competing – speakers, and makes it easy to combine and vary these factors into arbitrarily complex tests and analyses:

Simultaneously controlled artificial heads allow simulations with competing talkers speaking from different directions and at different volumes. If required, the HEAD acoustics turntable HRT I rotates the terminal containing the speech recognition interface to simulate user behavior, such as turning away from the microphone.

VoCAS can, of course, also control background noise simulation. Systems such as 3PASS flex, 3PASS lab, HAE-BGN, and HAE-car, together with the reverberation simulator 3PASS reverb, provide realistic acoustic conditions for everyday situations: conversations in a moving vehicle, voice control of an MP3 player in a noisy cafeteria with clinking glasses, navigation inputs while a train pulls into the station, and many more.

VoCAS can thus vary all factors that significantly influence how terminals perform in interaction with speech recognition systems, and it is equally suited to "simple" and "complicated" cases. It is vital that the sounds in each test, i.e., entire test sequences, are precisely reproducible; this is indispensable for benchmarking different speech recognition systems. At the same time, VoCAS delivers directly comparable, in-depth insights into the performance of the tested systems and clearly shows which adjustments under which conditions can optimize them.
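A generic way to get test sequences that are varied yet exactly repeatable is to drive all randomness from one seeded generator. The Python sketch below is an illustration of that idea, not how VoCAS works internally; `build_sequence`, the command texts, and the noise file names are hypothetical.

```python
import random

def build_sequence(commands, noise_files, seed):
    """Return a randomized but reproducible list of (command, noise_file, noise_offset_s)."""
    rng = random.Random(seed)  # private generator: same seed -> same sequence
    order = list(commands)
    rng.shuffle(order)  # randomize the order of the speech commands
    return [
        (command, rng.choice(noise_files), round(rng.uniform(0.0, 30.0), 2))
        for command in order
    ]

commands = ["turn on the light", "call mom", "navigate home", "next track"]
noise_files = ["cafeteria.wav", "car_100kmh.wav", "train_platform.wav"]

first = build_sequence(commands, noise_files, seed=2024)
second = build_sequence(commands, noise_files, seed=2024)
print(first == second)  # True
```

Python only guarantees that `random()` itself stays stable across versions for a given seed, so for long-term benchmarking it is safer to store the generated sequence alongside the seed rather than regenerate it later.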

Better Simple – Preferably Really Good

A user-friendly interface is not the icing on the cake; it is a must, even and especially for highly specialized analysis software. Nothing is more annoying than failing to exploit a program's full functionality simply because you can't find features or don't know how to use them. With VoCAS, by contrast, you can intuitively create automated test sequences for any speech recognition system, record your own speech commands with the integrated recorder or import existing audio data, cut and filter files, and calibrate them to specified speech levels.
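Calibrating a recording to a specified level boils down to measuring its current level and applying one constant gain. The Python sketch below assumes a digital RMS level in dBFS (full scale = 1.0) and an example target of -26 dBFS; it is a simplification, since a real lab calibrates acoustic levels at a reference point, and standardized speech level measurement (ITU-T P.56) ignores pauses. The function names are hypothetical.

```python
import math

def level_dbfs(samples):
    """RMS level in dB relative to a full-scale amplitude of 1.0."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    if rms == 0:
        raise ValueError("cannot compute the level of a silent signal")
    return 20 * math.log10(rms)

def calibrate(samples, target_dbfs):
    """Apply one constant gain so that the RMS level of samples equals target_dbfs."""
    gain = 10 ** ((target_dbfs - level_dbfs(samples)) / 20)
    scaled = [x * gain for x in samples]
    if max(abs(x) for x in scaled) > 1.0:
        raise ValueError("target level would clip; choose a lower target")
    return scaled

fs = 16000  # sample rate in Hz (assumed)
recording = [0.5 * math.sin(2 * math.pi * 300 * t / fs) for t in range(fs)]
calibrated = calibrate(recording, target_dbfs=-26.0)
print(round(level_dbfs(calibrated), 3))  # -26.0
```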

VoCAS uses tags for quick and easy management of vast numbers of speech command files. Python scripts make it possible to customize and automate test sequences for the speech recognition system. VoCAS also makes it easy to evaluate the test results: with the help of complex queries that take the different tested interference factors into account, users get precise answers to their questions about how well their speech recognizer works.
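A widely used metric for such evaluations is the word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the recognizer output into the reference transcript, divided by the number of reference words. The Python sketch below computes WER and pools it per tag, so that results can be compared by interference condition. The helper names, tags, and results are hypothetical examples, not VoCAS's scripting API or measured data, and the text normalization (lowercasing only) is deliberately minimal.

```python
from collections import defaultdict

def word_errors(reference, hypothesis):
    """Levenshtein distance on words: substitutions + deletions + insertions."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,            # deletion
                curr[j - 1] + 1,        # insertion
                prev[j - 1] + (r != h), # substitution or match
            )
        prev = curr
    return prev[-1]

def wer_by_tag(results):
    """results: iterable of (tags, reference, hypothesis); returns {tag: pooled WER}."""
    errors, words = defaultdict(int), defaultdict(int)
    for tags, ref, hyp in results:
        e, n = word_errors(ref, hyp), len(ref.split())
        for tag in tags:
            errors[tag] += e
            words[tag] += n
    return {tag: errors[tag] / words[tag] for tag in errors if words[tag]}

# Hypothetical results: (tags, reference transcript, recognizer output)
results = [
    ({"quiet", "0deg"}, "turn on the light", "turn on the light"),
    ({"cafeteria", "0deg"}, "turn on the light", "turn on the night"),
    ({"cafeteria", "90deg"}, "call mom", "call"),
]
rates = wer_by_tag(results)
print(rates["quiet"], round(rates["cafeteria"], 3))  # 0.0 0.333
```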

With VoCAS, the possibilities are endless. And you discover them easily and intuitively.